There’s a reason Perforce Helix Core is used by 19 of the top 20 AAA game studios around the globe. In an 8K world filled with experiences that are more immersive, hyper-realistic, and visually stunning than ever before, Perforce Helix Core continues to be the only version control system that reliably handles large asset files such as game art, textures, and levels.

However, ever-increasing file sizes are amplifying one of the core challenges of 21st-century game development: collaborating on large files from remote or home offices, or with teammates located around the globe. To address this challenge, Perforce developed solutions such as Helix Core edge servers and forwarding replicas.

Distributed development has become the new normal in game development, as demonstrated by the growth of conferences like the External Development Summit (XDS), the success of specialized art, animation, and visual effects studios like Lakshya Digital, and new production pipeline models that accommodate more dynamic relationships between studios, publishers, and external partners. As we head into the new decade, the problem of handling large files across long distances is only going to intensify.

Luckily, Perforce Helix Core comes with a number of features designed to address the problem of multi-site replication. Perforce launched federated services as early as version 2008.1 to promote “better performance for users at remote sites, reduce bandwidth requirements for installations separated by low bandwidth and/or high latency connections, and reduce the load on central servers.”
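To make the bandwidth argument concrete, here is a back-of-the-envelope Python sketch. It assumes a hypothetical remote team whose members all sync the same set of files, and it ignores compression, partial syncs, and protocol overhead. The function name `wan_gb_transferred` and the team size and sync size in the usage lines are illustrative assumptions, not Perforce measurements.

```python
def wan_gb_transferred(users: int, sync_gb: float, local_replica: bool) -> float:
    """Rough estimate of data crossing the WAN when a remote team syncs the same files.

    Simplifying assumptions (illustrative only): every user syncs the full
    set once, and nothing is compressed or already cached.
    """
    if local_replica:
        # A nearby replica pulls each file across the WAN once; users then
        # sync from the replica over the local network.
        return sync_gb
    # Without a replica, each user pulls the full set across the WAN.
    return users * sync_gb


# Hypothetical remote studio: 20 artists, each syncing 50 GB of assets.
print(wan_gb_transferred(20, 50.0, local_replica=False))  # 1000.0
print(wan_gb_transferred(20, 50.0, local_replica=True))   # 50.0
```

Under these assumptions, the WAN traffic without a local server grows linearly with team size, while with a replica it stays roughly constant, which is why local servers pay off most for large remote teams working on large files.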

Through successive updates, the Perforce team has introduced increasingly specialized server types and supporting services to make collaboration across sites easier. At the time of this writing, Helix Core supports several types of replicated servers.

In this post, we will cover the top five pros and cons of two of the most popular replica types used to build multi-site distributed Perforce topologies: commit-edge server pairs and forwarding replicas.

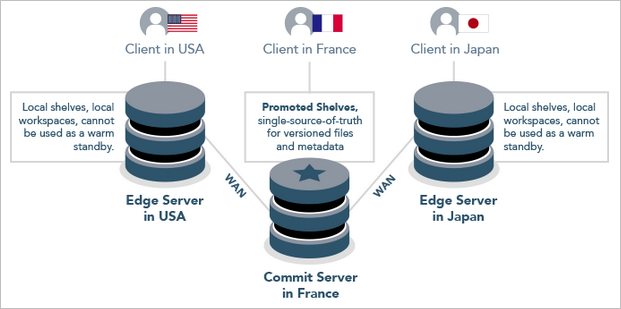

Commit-edge architecture was first released in Perforce 2013.2. Unlike in traditional replication, certain data manipulated on an edge server (for example, the metadata for workspaces bound to that edge server) never needs to be replicated upstream to the commit server. Because edge servers handle more commands locally, without contacting the central server, than any other type of distributed Helix Core server, they offer the best overall performance of the distributed Perforce server types, as long as they are located geographically close to the teams that connect to them.
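The following Python sketch is a toy model of the routing idea described above, not Perforce's actual implementation. The command groupings in `EDGE_LOCAL` and `NEEDS_COMMIT` are simplified assumptions chosen for illustration (real Helix Core behavior has more special cases), and the function names are hypothetical. It shows why, in this model, a typical artist session reaches the commit server only once, at submit time.

```python
# Toy model (illustrative, not Perforce's implementation) of where commands
# are serviced in a commit-edge topology.

# Hypothetical: commands answered entirely by the edge server in this model,
# using its local workspace metadata and replicated global metadata.
EDGE_LOCAL = {"sync", "edit", "add", "delete", "revert", "opened", "have",
              "files", "filelog", "print", "diff", "changes"}

# Hypothetical: commands that need the commit server in this model.
NEEDS_COMMIT = {"submit"}


def route_on_edge(command: str) -> str:
    """Return where a command is serviced in this simplified commit-edge model."""
    if command in EDGE_LOCAL:
        return "edge"
    if command in NEEDS_COMMIT:
        # The edge receives the files from the client; only the final
        # commit step reaches the commit server.
        return "edge+commit"
    # Unknown commands are conservatively sent to the commit server.
    return "commit"


def commit_server_contacts(session: list[str]) -> int:
    """Count how many commands in a session reach the commit server."""
    return sum(1 for command in session if route_on_edge(command) != "edge")


# A typical artist session: sync, check out, inspect changes, submit.
session = ["sync", "edit", "edit", "opened", "diff", "submit"]
print([route_on_edge(c) for c in session])
print(commit_server_contacts(session))  # 1
```

The design choice being illustrated is that workspace-level data stays on the edge, so day-to-day work does not wait on the WAN; only the step that changes shared history needs the central server.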

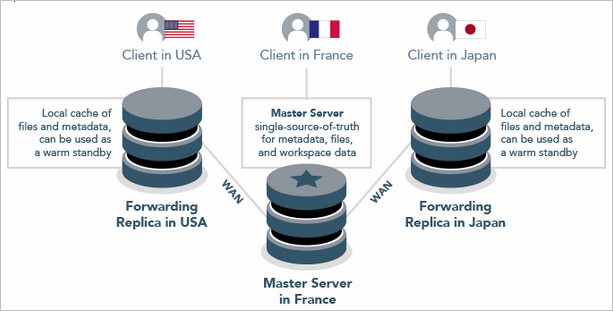

Although basic replicas were available in Perforce Helix Core as far back as version 2009.2, forwarding replicas were not introduced until version 2012.1. Forwarding replicas contain both versioned files and file metadata, which allows them to service common read-only commands without a round trip to the master (target) server and without adding load to it. When a user runs a command against a forwarding replica that would change file contents or metadata, the operation is simply forwarded to the master server for completion, and the response is automatically relayed back through the replica.
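For comparison, here is a similar toy model of a forwarding replica in Python. The `READ_ONLY` command set and the round-trip figures are hypothetical assumptions, and the function names are illustrative. The sketch only sums per-command network round trips for a user sitting near the replica; it ignores file transfer time and server processing.

```python
# Toy model (illustrative, not Perforce's implementation) of a forwarding
# replica: read-only commands are answered locally, while commands that
# change file contents or metadata are forwarded to the master server.

# Hypothetical set of read-only commands used only for this sketch.
READ_ONLY = {"files", "filelog", "print", "changes", "describe", "fstat"}


def route(command: str) -> str:
    """Return which server ultimately services the command in this model."""
    return "replica" if command in READ_ONLY else "master"


def session_latency_ms(session: list[str], wan_rtt_ms: float, lan_rtt_ms: float) -> float:
    """Sum per-command round-trip latency for a user near the replica."""
    total = 0.0
    for command in session:
        total += lan_rtt_ms  # every command first reaches the nearby replica
        if route(command) == "master":
            total += wan_rtt_ms  # forwarded commands also cross the WAN and back
    return total


# Hypothetical link: 150 ms WAN round trip to the master, 2 ms on the LAN.
session = ["files", "filelog", "print", "edit", "submit"]
print(session_latency_ms(session, wan_rtt_ms=150.0, lan_rtt_ms=2.0))  # 310.0
print(len(session) * 150.0)  # same session connected directly to the master: 750.0
```

In this model, all of the savings come from read-only traffic, which is why a forwarding replica helps most when users spend much of their time browsing history and fetching files rather than changing them.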

Ultimately, deciding which solution best fits your team’s requirements is a balancing act among the unique characteristics of your team’s project, workflow, and resources. Most studios running distributed Assembla Perforce Single Tenant Cloud deployments have found that managed forwarding replicas in the cloud deliver good performance, although the Assembla P4 DevOps team has also set up commit-edge topologies for teams with more complex personnel footprints, workflows, or requirements.

Whether you choose to implement a commit-edge or forwarding replica system for your distributed Perforce development, both solutions provide significant benefits and performance improvements for globally distributed teams.

Do you have questions, or tips from your own experience for teams interested in setting up a distributed Perforce system? Leave a comment below!

And if you would like advice about which solution might best fit your team’s particular needs, or want to learn more about Assembla’s managed cloud Perforce solutions, please don’t hesitate to reach out to support@assembla.com!